We need to read this directory and record the information of all files into table.

The information - File Name, Created Date, Modified Date, File Type, File Path, size etc.

2. If file is updated, the status will sync to database.

For example, if the file A has been modified, the modified date will be a new date. When we run package, this file's information will be updated in database with new file size, modified date etc.

3. If file is deleted, database will sync this information and won't show the file's information.

3. If a new file is added, database will sync this information and show the new file's information.

4. Also need to record historical information about files.

1. Make sure you have created these tables in demo database - SSISDemoDB.

USE SSISDemoDB

GO

IF OBJECT_ID('DocumentTypeMapping')

IS NOT NULL

DROP TABLE DocumentTypeMapping

CREATE TABLE DocumentTypeMapping

(

ID INT IDENTITY(1,1) PRIMARY KEY,

FileExtension NVARCHAR(50) NOT NULL,

FileTypeDescription NVARCHAR(100) NOT NULL

)

INSERT INTO DocumentTypeMapping VALUES

('dtsx','Integration

Services Package'),

('database','Analysis

Services Database'),

('dtproj','Integration

Services project file'),

('user','Visual

Studio Project User Options file'),

('dtsx','Integration

Services Package'),

('txt','Text

Document'),

('xlsx','Microsoft

Excel Worksheet'),

('xlsx','Microsoft

Excel Worksheet') ,

('vsd','Microsoft

Visio Drawing'),

('doc','Microsoft

Word Document 2003-2007 ')

IF OBJECT_ID('DocumentsStaging') IS NOT NULL

DROP TABLE DocumentsStaging

CREATE TABLE DocumentsStaging

(

ID INT IDENTITY(1,1) PRIMARY KEY,

NAME NVARCHAR(500) NOT NULL,

FullPath NVARCHAR(2000) NOT NULL,

CreatedDate DATETIME NOT NULL,

FirstModifiedDate DATETIME NOT NULL,

NewModifiedDate DATETIME NOT NULL,

FileType NVARCHAR(200) NOT NULL,

Size BIGINT NOT NULL,

BatchInsertTime DATETIME DEFAULT GETDATE() NOT NULL

)

IF OBJECT_ID('Documents') IS NOT NULL

DROP TABLE Documents

CREATE TABLE Documents

(

ID INT IDENTITY(1,1) PRIMARY KEY,

NAME NVARCHAR(500) NOT NULL,

FullPath NVARCHAR(2000) NOT NULL,

CreatedDate DATETIME NOT NULL,

ModifiedDate DATETIME NOT NULL,

FileType NVARCHAR(200) NOT NULL,

Size INT NOT NULL,

Operation NVARCHAR(50) NOT NULL

)

IF OBJECT_ID('DocumentsErrorExtension')

IS NOT NULL

DROP TABLE DocumentsErrorExtension

CREATE TABLE DocumentsErrorExtension

(

ID INT IDENTITY(1,1) PRIMARY KEY,

NAME NVARCHAR(500) NOT NULL,

FullPath NVARCHAR(2000) NOT NULL,

FileType NVARCHAR(200) NOT NULL,

)

Descriptions -

Table DocumentTypeMapping shows the description of specified file, usually we get file with its extension like - .doc , .txt, .ppt. We won't show user such information because the use may not understand what does '.ppt' or 'prot' mean, so configure this mapping before you composed your file processing package.

Table DocumentsStaging - It mostly like a historical table which will record all documents information in historically loading files from specified directory.

For example, the first time we load folder 'Test', there're A,B,C file in this folder, we record these information when the first time we run the package. But at the second time when we run this package, we only find file B inside and a new file D.

Then we will record like this -

ID, File Name, BatchInsertTime

1 A 2011

2 B 2011

3 C 2011

4 B 2012

5 D 2012

Table Documents will only show the current existing files in specified folder.

So the first time when load files, it will show -

ID, File Name, BatchInsertTime

1 A 2011

2 B 2011

3 C 2011

The second time it will show -

ID, File Name, BatchInsertTime

1 B 2012

2 D 2012

Because system will think the file A and C have been deleted by user or their name has been changed.

So if use want a report to show files information, table DocumentsStaging can show the history and table Documents can show current information of files.

Table DocumentsErrorExtension will record all mismatched file type. For example, there's no description for file extension - '.mdb'. If package process a new file with file extension .mdb, the information the this file won't be recorded in table Documents, it will be recored in DocumentsErrorExtension to let system admin know this file doesn't has a file type description, we need to insert a description for this file first.

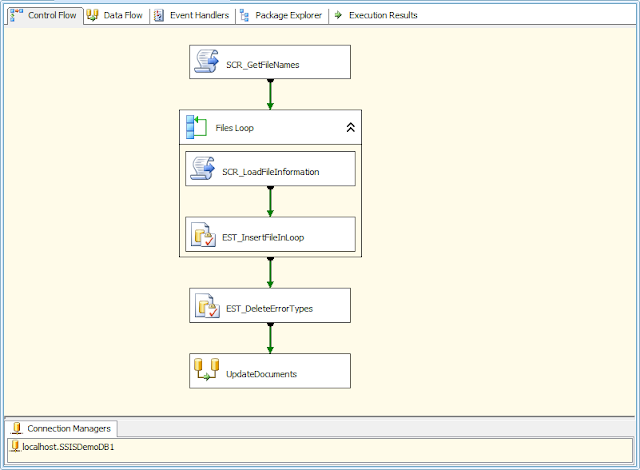

SSIS Framework

Control Flow -

Data Flow -

Package Variables -

Steps:

1. Create a package 'CH03_SyncDocuments' and defined package variables as above figure.

Package variable - Files. It will be used to store all file names from specified package variable - Directory.

Please assign your custom file folder path to 'Directory'

2. Drag a script component to Control Flow Pane, name it as 'SRC_GetFileNames', click to open it.

ReadOnlyVariables - Directory (We have assigned a value to this variable in step 1)

ReadWriteVariables - Files (All file names will be saved in this variable)

Click Edit Script and import System.IO in default class, add such codes.

public void Main()

{

//Get the directory from user defined variable

string directory = Dts.Variables["User::Directory"].Value.ToString();

//Get all file names in specified directory

string[] fileNames = Directory.GetFiles(directory);

//Save to user defined variable

Dts.Variables["User::Files"].Value

= fileNames;

Dts.TaskResult = (int)ScriptResults.Success;

}

Save these changes and run package to make sure if can you see a green status of component. If script component becomes green, that means we can load all file names correctly from specified folder.

We often do this test when we finished a configuration to each component.

3. Drag a loop foreach container as figure shows (Files Loop):

User::FilePath's value is from User::Files in a loop.

4. Drag a script component with name 'SCR_LoadFileInformation' into loop container, edit this component

We use User::FilePath to load a file and read all properties of this file.

ReadWriteVariables are variables which will save all loaded properties from a file.

Edit script, import System.IO and try to understand such code inside -

public void Main()

{

string

fileName = Dts.Variables["User::FilePath"].Value.ToString();

FileInfo file = new FileInfo(fileName);

Dts.Variables["User::Name"].Value

= file.Name;

Dts.Variables["User::CreatedDate"].Value

= file.CreationTime;

Dts.Variables["User::ModifiedDate"].Value

= file.LastWriteTime;

Dts.Variables["User::FileType"].Value

= file.Extension.Replace('.',' ').Trim();

Dts.Variables["User::Size"].Value

= (int)(file.Length/1024);

Dts.Variables["User::FilePath"].Value

= file.FullName;

Dts.TaskResult = (int)ScriptResults.Success;

}

How to pass a package variables and how to assign value to package variables ? You should know the answers.

Run this package, you should make sure all components are green finally.

5. In loop container, add Execute SQL Task with name EST_InsertFileInLoop, edit it.

You should know how to build a OLE DB connection, I have mentioned it in previous posts.

For SQL Statement, we need to build a SQL to insert all document information to a staging table, the parameter will use ? to instead of the real value, we will configure parameters in 'Parameter Mapping'.

We need to know which values we should to insert, then map them like below figure shows:

CREATE TABLE DocumentsStaging

(

ID INT IDENTITY(1,1) PRIMARY KEY,

NAME NVARCHAR(500) NOT NULL, -- User::Name

FullPath NVARCHAR(2000) NOT NULL, -- User::FilePath

CreatedDate DATETIME NOT NULL, -- User::CreatedDate

FirstModifiedDate DATETIME NOT NULL, -- User::ModifiedDate

NewModifiedDate

DATETIME NOT NULL, -- User::ModifiedDate

FileType NVARCHAR(200) NOT NULL, -- User::FileType

Size BIGINT NOT NULL, -- User::Size (KB)

BatchInsertTime DATETIME DEFAULT GETDATE() NOT NULL -- System Variable: System::StartTime

)

FirstModifiedDate DATETIME NOT NULL, -- User::ModifiedDate

NewModifiedDate DATETIME NOT NULL, -- User::ModifiedDate

- In Windows OS, we use C# can easily get a file's properties like created date and modified date.

- For example, I created a file on 05/01/2011. Then I updated this file with new contents on 06/01/2011.

- So the created date property of this file is - 05/01/2011, the modified date property is 06/01/2011

- But for this demo, we need to know which files are new added, which files are new updated, the basis is we judge if the created date is same as modified date. If created date = modified date, this file is new added, if created date <> modified date, we think this file has been updated.

- The problem for this demo is, if we load this file on 07/01/2011 at the first time, what conclusion we should make ? Is is a updated file or a new file. Actually it should be a new file to us, it may be edited several times before our package load it.

- So I design two columns in tables, both columns store the value of Last Access Time of the file. The FirstModifiedDate doesn't mean the real first update time (06/01/2011) but means the last modified time when loading this file to staging table.

BatchInsertTime DATETIME DEFAULT GETDATE() NOT NULL -- System Variable: System::StartTime

- We need to record which files are loaded per every time we run this package.

You should understand the above steps doing:

- Get all file names and save to an object which is used in loop container.

- Each loop, we can use a file name to load a file and get properties of this file. All properties of a file are save in different package variables.

- Map these package variables and compose SQL with these variable to insert a file into staging table.

- When the loop ends, the information of all files are recorded.

6. Build Execute SQL Task to delete error extension information and add a data flow task to process the main sync logic.

Execute SQL Task - EST_DeleteErrorType

Data Flow Task - UpdateDocuments

Edit EST_DeleteErrorTypes with existing OLE DB connection.

SQL Statement -

DELETE FROM dbo.DocumentsErrorExtension

WHERE FileType IN(

SELECT DISTINCT

FileType

FROM dbo.DocumentsStaging

)

The component is used to delete all error information from DocumentErrorExtension. If the file type of a file doesn't map to mapping table, the information will be recorded in DocumentErrorExtension.

Go into data flow task - click 'UpdateDocuments', we 're going to design data flow task to sync documents.

7. Please see the data flow framework again.

The main logic is -

- Get last batch documents from staging table to look up documents table.

- NO Match - The current file doesn't exist in documents table, then insert new file information to documents (Also need to compare the file type, if the file type doesn't exist in file type mapping table, it will redirect to error extension table).

- Match - The current file exists in documents table, need to update document information in document table.

8. Add OLE DB Source in data flow, new name is 'OLE_SRC_GetStaging', edit it.

Getting the last batch of files from staging table as a source.

SELECT *

FROM dbo.DocumentsStaging

WHERE

BatchInsertTime = (SELECT MAX(BatchInsertTime) FROM dbo.DocumentsStaging)

Pass all columns to next component.

9. Add a look up component - 'LKP_LookUpDocuments', edit this component.

Choose 'Redirect rows to no match output'

Use 'Document' table as a look up table.

Available Input columns are from 'OLE_SRC_GetStaging', please see Step 8.

Available Lookup Columns are from table Documents.

Simply to say, there're two tables - DocumentsStaging and Documents. Just like the join in SQL, we use NAME and CreatedDate to see if files in staging exist in Documents.

There're two results here, Matched and NON-Matched, so two outputs are also generated in this component - Matched Output and NON-Matched Output, these outputs can be a source for next data flow component.

Drag 'Name' in input column to the 'Name' in lookup columns, same operation to CreatedDate.

10. For No Match Output, we're going to insert a new record. But we need to check if the file type exist in mapping table first.

Drag a lookup component 'LKP_Look Up File Extension' and tie to 'LKP_LookUpDocuments', choose 'Lookup No Match Output' when message box shows up.

Drag a OLE DB Components CMD_UpdateDocuments' and tie to 'LKP_LookUpDocuments', choose 'Lookup Match Output' when message box shows up.

Need to understand the source of Matched and Non-Matched components.

For Non Matched component 'LKP_Look Up File Extension', its source columns are from the original source 'OLE_SRC_GetStaging'.

For Matched component 'CMD_UpdateDocuments', its source columns are from the join columns of 'OLE_SRC_GetStaging' and 'LKP_LookUpDocuments' base on 'Name' and 'CreatedDate'.

11. Edit LKP_Look Up File Extension

Choose look up table - DocumentTypeMapping

File Type in input columns maps to File Extension in look up columns.

12. If mapped, that means the file type from staging table is correct type, otherwise, it should be incorrect file type which haven't been recorded in file mapping table.

13. If mapped, we get those records from staging table with the file type description and insert into table Documents.

Drag a Derived Column component ''DC_InsertOperation' and tie to 'LKP_Look Up File Extension' by using 'Lookup Match Output', edit it.

A new column 'Operation' will be built with a value 'NEW', this column will be added into up stream input columns and will be passed together to next component as a source.

14. Add an OLE DB Destination component 'DEST_InsertNewDocuments' to connect to ''DC_InsertOperation', edit it.

Do mapping.

15. Drag another component 'DEST_NoMatchedFileExtension', edit it.

16. CMD_UpdateDocuments

OLE DB Connection

Edit SQL Command

UPDATE dbo.Documents

SET FullPath = ?,

ModifiedDate =

?,

Size =

?,

Operation =

'UPDATE'

WHERE NAME = ?

AND FileType =

?

AND CreatedDate =

?

AND UPPER(Operation) = 'NEW'

AND

ModifiedDate <> ?

17. CMD_DeleteNONExistDoc

DELETE FROM dbo.Documents

WHERE Name NOT IN(

SELECT NAME FROM

dbo.DocumentsStaging

WHERE BatchInsertTime =

(

SELECT MAX(BatchInsertTime)

FROM dbo.DocumentsStaging

)

)

18. Testing

19. Add a new file

20. Update contents.

Configurations!

Please let me know if you have any questions about this blog.

Contact to me by email - simonlvpin@yahoo.com

Or Skype - simonlvpin